Relationships

Relationships is a theme that examines how the connection between humans and AI will evolve as the technology advances. From human-machine collaboration and cohabitation to consumer expectations for AI that is fallible and learns over time, our relationship with technology is moving from agent to assistant to companion and beyond.

Forging bonds with AI

The relationship between humans and AI services and devices should be viewed not in the literal sense, but as a metaphor that describes two-way interaction, collaboration and the exchange of information and understanding. This distinguishes AI from earlier technologies, as we now work in tandem with these tools, learning from each other to achieve a common goal.

Artificial Intelligence Experience (AIX) is a concept that requires designers, developers, policymakers and end-users to share an understanding of the human-centric dimensions that must be considered to create equitable, enjoyable and valuable AI products and services. This includes how we, as humans, might interact with technology that is becoming better at thinking and acting like a human and that is beginning to take a more meaningful role in our lives.

For Prof. Alex Zafiroglu, Deputy Director of the 3A Institute (3Ai) at the Australian National University, an anthropologist and formerly Intel’s foremost domain expert in homes and home life, end-users’ expectations need to be managed. She points out that machines are incredibly good at specific things but are limited to those things.

“They’re very good at maths, for example, and humans are very good at other things, including relationships with other human beings,” she says. “When we mix those two things up and we expect our computing systems to do the hard work of sociality and connections between people, we are making a mistake at the level of who has responsibility for actions in the world, particularly as it relates to relationships among people.”

Indeed, we are seeing the early steps towards technology taking more active roles in our daily lives, be it vacuuming a rug or taking on the cognitive task of digitally codifying the world. But most of these cases show AI focused on a single task, which is a far easier application of the technology for end-users to understand and relate to. Seen this way, Artificial General Intelligence (AGI) is the less likely path; instead, these early instances of narrow AI should be imagined multiplied over and over to create emergent AI experiences.

-

Interview Jeff Poggi

Co-CEO, McIntosh Group

“Human-centric design for AI is really vital for the successful propagation of AI into society. Right now I think humans are very comfortable being the masters of machines. We’ve designed machines to be our tools and our servants. But now it’s an interesting kind of moment in time where we’re looking at machines … to be our peers and potentially even to be our advisors.” Jeff Poggi, Co-CEO of the McIntosh Group

One example of this is the Observatory for Human-Machine Collaboration (OHMC) at the University of Cambridge, which collaborated with domestic appliance firm Beko to train a robot to prepare an omelette from scratch.

The operative word here is train. The making of an omelette is based on narrow AI trained on data and repetition. But since it makes an omelette like a human, will end-users come to expect this robot to also make pancakes? Maybe. But what about making recommendations for music or healthcare treatments?

But AI applications will rarely be so specific. In the Levels of AIX Framework launched at CES 2020, various scenarios were used to help illustrate the increasing integration of AI in our lives. But we need to be comfortable with the technology in order for it to become useful.

Imagine the weather forecast calls for snow: the AI alerts the family to dress warmly, preheats the oven and orders ingredients to prepare their favourite meal.

Alternatively, the car’s AI, acting as an extension of the home, knows that the user is running late, suggests altering the usual route so that an appointment is not missed, and provides a calming environment.

Finally, a car’s AI interfaces with the smart city to experiment with different routes, departure times and driving speeds, optimizing journeys based on daily user objectives and other goals such as fuel efficiency or journey time.

These scenarios aren’t far-fetched. In fact, they may happen sooner than we realize. What will be important to understand, however, is how AI will be designed to consider the end-user and the ways they will want to relate to technology that knows them ever more personally.

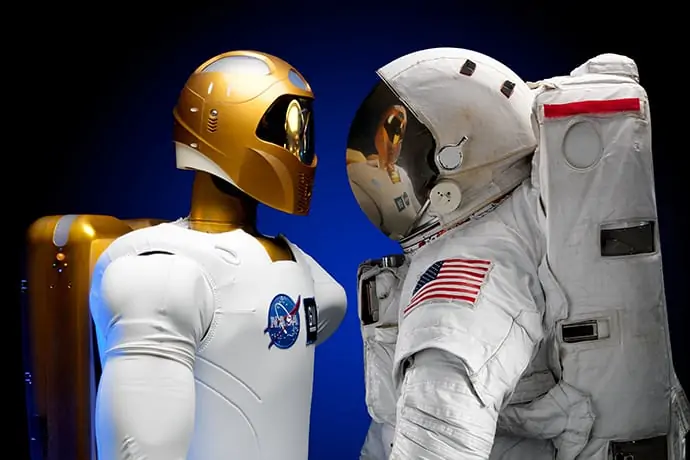

Collaboration

Better together

Whether in the kitchen or on the factory floor, human-machine collaboration is shaping how we perform tasks and make decisions. The question is how it will develop and continue to shape the future of work.

As AI matures, it will become more pervasive. We will see new specialized roles emerge for managing the new dynamics of AI, but eventually everyone will need to update their AI literacy to better collaborate with new technologies.

Most people can grasp the concept of an AI-powered recommendation algorithm and adjust their behaviour to affect the output of the algorithm. However, people have limited choice and only blunt tools for manipulating an algorithm to their needs.

When the different tooling and skill sets standardize along the value chain, the choice of and access to AI technology will vastly increase, engendering far more innovation than we have yet seen with AI software.

To get to that point, we face the challenge of bridging the gap between proof-of-concept in the lab and real-world deployment. Researchers and engineers play an important role right now in helping close that gap, but they cannot do it alone. They, and the institutions that train them, need to focus on standardizing their tools and processes so that others can more easily collaborate down the value chain.

An AI robot working in a coal mine or car manufacturing plant completes certain tasks repetitively, which is not the same as the potential for human-machine collaboration, but it does create a base from which to start and learn.

According to Charles Lee Isbell Jr., Dean of Computing at Georgia Institute of Technology, for something to truly be intelligent, in a way that is meaningful, it has to be intelligent with people, not intelligent like people. “We are all interesting creatures, not because we exist in a vacuum and we can just think on ourselves, we’re actually interesting because we interact with others,” he explains. “It’s actually how a lot of our learning happens, right? We learn by interacting with others, they get transferred knowledge generation to generation. And I think that that is sort of where the sweet spot is around AI.”

And when it comes to the developer and research community, we must key in on the fact that at Level 3, and later at Level 4, of the AIX Framework, concepts such as collaboration, user understanding and “defined spaces” are critically important.

Whether you are at work or at home, it is important that the AI device understands the context of that place. Our relationships with AI in the future, and our ability to have a positive cohabiting or co-working relationship, will hinge on the ability of whatever device exists to be flexible.

As an example, humans generally behave and talk differently when they are at work versus when they are at home. The challenge arises when those spaces start to converge and there is no clear boundary between work life, home life and public life. What happens then?

Named as one of the world’s most influential women on the Ethics of AI in 2019, Dr Christina Colclough from The Why Not Lab is worried about such a future scenario. “Elaborate AI-driven systems where work and your private life, where these tools might begin to talk to one another and create more inferences. For me, this is an utterly scary scenario. It’s scary because it’s subjective, we’re becoming commodities.”

-

Interview Max Welling

VP Technologies at Qualcomm Technologies Netherlands B.V.

“Humans will have to work together with machines, running algorithms, certainly in the beginning, they become sort of tools that humans will use … but at some point … basically, everything you can think of will probably be revolutionized by machine learning in one way or the other. That’s my prediction.” Dr. Max Welling, VP Technologies at Qualcomm Technologies Netherlands B.V.

Learning

The power of human-AI collaboration

How does an AI device learn about us and how do we learn about it? It is a fascinating question and the best way to answer it, for now at least, is to show what might occur. Humans can adapt; it is part of our very being. But while AI is now learning about us, we as a society are falling behind the learning curve on how this technology will impact us. More importantly, we must learn to leverage AI rather than the other way around.

“Well, if we assume that there’s going to be this massive influx of artificial intelligence in our private lives as citizens, as consumers, as workers as well, then of course, we’re going to need to learn, what questions to ask,” says Colclough. “But I think for the majority of ordinary citizens for ordinary workers we cannot even imagine the power and potential of these technologies. So, we don’t know what questions to ask. We don’t know what the threats to our privacy rights or human rights are.”

And what questions will AI need to ask about us?

When it comes to learning, a pivotal AI sub-category is Affective Computing (AC), described by Hayley Sutherland, senior research analyst of AI software platforms at IDC, as a combination of computer science, behavioural psychology and cognitive science. Sutherland stated in a blog released last year that AC uses hardware and software to identify human feelings, behaviours and cognitive states through the detection and analysis of facial, body language, biometric, verbal and/or voice signals.

Multidisciplinary approaches like this, and the ones advocated by Prof. Zafiroglu at 3Ai, allow AI systems to be built not only to learn the affective signals of a human but also to involve that same human, as the end-user, in the entire process of learning how best to use what is essentially an intelligent tool.

“We often find that when people are talking about AI, they are talking about super big systems and it sounds really big and scary, and it gets abstracted out to a level that you can’t really tell what the impact is going to be on the individual person that’s using the system, or it gets refined down so closely to an interaction between a human being and a device. You can’t begin to see the connections between that person and that device and then other systems that are also using that data. We [at 3A Institute] are filling a role right in the middle there, trying to draw the threads together between how data is being used in the world and the types of systems that are enabling and making that type of usage of data and the usage of those systems understandable and actionable. A wide variety of people that need to understand how that data is being used. We are training the next generation of practitioners to go out into the world and work in a variety of settings from policy to industry, to academia, to education at the non university level, to think tanks, to product teams, to strategy teams.”

Empathy

Press here for feelings

Rosalind Picard, a computer scientist at MIT and co-founder of MIT Media Lab spin-off Affectiva, has stated: “If we want computers to be genuinely intelligent and to interact naturally with us, we must give computers the ability to recognize, understand, even to have and express emotions.”

But the balance between how humans and AI interact is a delicate one given how human emotion often conflicts with logic. According to Jeff Poggi, Co-CEO of McIntosh Group, AIX design will be crucial for how empathy is applied in bridging human and AI interaction in the future.

“Human centric design for AI is really vital for the successful propagation of AI into society. Right now I think humans are very comfortable being the masters of machines. We’ve designed machines to be our tools and our servants. And that’s been since the industrial revolution. But now it’s an interesting kind of moment in time where we’re looking at machines to not just be our servants, but actually to be our peers and potentially even to be our advisors.

“And so that’s a pretty major inflection point, I think, in the state of technology and also the relationships of people with that technology and how we interact with that. And humans are very sensitive beasts. We are very emotional. We have relationships with everything around us. So the idea of this sort of Human centric design for AI, I think is the right notion because we have to come at it from that perspective of how does it impact the person, the individual in an appropriate way, in order to build a fulfilling relationship between the man and the device.”

According to Pegasystems, a Cambridge, Mass.-based company that specializes in cloud software for customer engagement and operational excellence, empathy is not about humans versus AI; it is about using the best of what both have to offer.

A reality check is contained in a major Pegasystems survey of 6,000 consumers from North America, the U.K., Australia, Japan, Germany and France about their views on AI and empathy.

Empathy, the company notes, is defined as the ability to understand and share the feelings of another, or simply as “putting yourself in someone else’s shoes.” But are humans born with empathy or is it learned? Half of the audience surveyed believes human beings are born with the capacity for empathy but must learn or be taught it.

“The future of AI-based decisioning is a combination of AI insights with human supplied ethical considerations.”

Empathy is when the AI senses the user is stressed about a job interview in their calendar. It offers to help them prepare by creating interview questions and providing feedback, while also setting the car route and the alarm and suggesting wardrobe options.

Finally, empathy is the non-verbal, and perhaps non-intentional, interaction by the human with the system to direct the goals of that system. Poggi illustrates it well in his interview: “I think an interesting, potential use case would be emotion capture. What is the emotion of the consumer? So if I am if I’m coming into the house, and I am in a relaxed mood, and I know that I’m in a relaxed mood cause I was just listening to some smooth jazz in the car on the way home from work and at a fairly low volume level, how do I sort of bring that mood of the user as they come into the home? How do I adapt lighting, music, blinds, you know, the whole home ecosystem can be adapted to the mood of the user based on how they’re entering the house?

“One of the interesting concepts I think, is also to make AI then about the human or about the consumer, not about the device. So, the challenge for industry is, I may have AI in my refrigerator. I may have AI in my car. I may have AI in my phone, but I don’t want those devices to be controlling my experience. I want them to be working seamlessly together so that they can give me the optimal performance or the optimal benefit as an individual, regardless of sort of where I’m at and all the different devices around me.”

However, to ensure this sort of emotional engagement happens, it is apparent that some sort of safety net must be in place and that lines must be drawn to protect one member of the party, and one member only.

Recently, the European Parliament became one of the first political institutions to put forward recommendations on what AI rules should include with regard to ethics, liability and intellectual property rights.

Future laws, EU politicians said, should be made in accordance with several guiding principles, including: a human-centric and human-made AI; safety, transparency and accountability; safeguards against bias and discrimination; right to redress; social and environmental responsibility; and respect for privacy and data protection.

In addition, “high-risk AI technologies, such as those with self-learning capacities, should be designed to allow for human oversight at any time. If a functionality is used that would result in a serious breach of ethical principles and could be dangerous, the self-learning capacities should be disabled, and full human control should be restored.”

AIX design should consider how end-users want to engage with AI that is designed to understand them and anticipate their needs. It might not be practical to allow them to toggle on and off AI’s ability to read and mimic their emotions if that is its core purpose. What then would be the point of the technology? As an industry, we must work together as policymakers, researchers, developers and end-users to begin considering these questions.

Fallibility

Mistakes will be made, lessons will be learned

As in any relationship, there will be bumps in the road as we learn to work and live with AI systems and products. Not least among them will be a fundamental shift in our expectation that technology works as intended from the moment we plug it in.

We are not talking about buying a smartphone, 4K television or any number of other technology and electronic devices that work out of the box. As humans, we are going to have to accept that certain AI applications need time to learn about us and that they will likely get things wrong.

Maybe this is palatable for people when it is a poor movie recommendation, but as we automate more of our work, more of our health decisions and more of our human interactions and relationships, the stakes get higher and mistakes will be less acceptable to end-users.

How then can AIX design address people’s experiences with AI and their expectations?

“I try to think about how I am going to build a story that people will like, and how will I get them along the process so that they get the things that they actually accomplish and I can elicit from them what it is they’re trying to do,” says Isbell.

“When you think hard about this as a notion of experience, there’s an interesting trade-off, between giving people what they react to and they want, and helping people to come up with something new that they want, that they didn’t know that they actually wanted. And there is a trade off between making someone happy or feel as if they are happy and making someone better.”

Fallibility is not a one-way street. The industry frequently talks about teaching AI tricks about human beings, but what about the reverse? The fallibility of the end-user, and how best to limit their own manipulation or exploitation of AI, must also be considered.

This becomes critical particularly in Level 3 or Level 4 of the AIX Framework where clear and distinct decisions are being made not by a human, but by AI that is revolutionizing what can be automated and the scale at which it can be deployed.

For example, a severe form of fallibility occurs when an AI device demonstrates bias, something which can and will create untold problems. If an AI system learns what you like and aligns with your biases, but those biases are not aligned with the rest of your household, with the developer’s intentions or with society, is it broken?

Dr. Yuko Harayama, Executive Director of International Affairs at RIKEN and one of the initiators of Japan’s future-forward Society 5.0, may have an elegant solution to such potential issues of bias: “If society is mostly dominated by men, for example, they have their habits and their way of interaction and way of sharing permissions. And we [women] feel kind of [like an] outsider and have our own norms. And if we do not as a woman, adapt to their norms, it’s not so easy to really be listened to at the same level. [So] if it’s possible to have bias on one side, you can create a different bias on the opposite side. Why not experiment in this new way of designing things using positive biases?”

Whether or not AI incorporates bias purposefully, we must consider the consequential nature of the decisions the AI is making and whether it should even be making those decisions in the first place. Either way, our relationship with AI will be just like our relationships with each other: it is going to take time and effort.

Interface

Common standards, platforms must come first

As end-users of consumer AI assistants like Siri or Alexa, we are already learning new ways to interface with technology that are meant to make it feel more personal, more human. The advances in speech and language processing in the last few years are changing the way we think about and interact with AI. This is only the beginning.

As AI advances and becomes more embedded in our lives, the way we interface with these systems and products will directly affect how we understand, trust and interact with them. Some AI will act as collaborative tools with a need for direct engagement from the end-user while others will be purpose-driven, running in the background helping to automate our lives, predicting our needs.

Each application will need its own interface: some intuitive, some enjoyable and some, like body-language recognition, perhaps even subconscious.

But the relationship between AI systems is also going to be an important component for how end-users engage with the technology and for the end experience.

In the IBM essay, Gabi Zijderveld, chief marketing officer and head of product strategy at Affectiva, argues that bringing AI to its most useful state will require technologists to come together to establish common standards and platforms for AI: “As a consumer I’m not going to want to have to jump through hoops to get my phone to talk to my car, right?

“These are systems that should all be able to talk to each other, from the enterprise applications that we use in business to our mobile devices. Yet there are no standards for interoperability. I believe a consortium of industry will have to come together to solve this, cross-verticals and cross-use cases.”

Zijderveld touches on an interesting point about machine-to-machine relationships: the handshakes and the firewalls that will dictate how AI systems interact with each other. This will be especially relevant as AI makes up more of the “passive” environments we live in and our personal agents become able to interact with those environments.

Relationships are Hard

Whether personal or professional, collaborating or cohabitating, AI systems, services and products are intertwining with our lives. Humans and machines are already collaborating in hospitals and factories, and machines are helping us navigate traffic and even make dinner.

The successful integration of AI into the many aspects of our lives is going to hinge on our ability to accept, understand and trust the technology for what it is: complex software. AI is essentially a tool, but it is unique in that it is a tool that will learn from us, predict our needs, make decisions, and explore with us. It will also make mistakes, but it will get better – and we need to be accepting of that.

Dr Max Welling, VP Technologies at Qualcomm Technologies Netherlands B.V., believes it is only a matter of time before AI takes over much of our tedious and complex work, and that we will be glad of it. To get there, however, we will still need to learn to collaborate.

“What is interesting is that humans will have to work together with the machine, running algorithms, certainly in the beginning, they become sort of tools that humans will use … but at some point I think it will be mostly automated and it will be clear that the automated procedure is actually better than the procedure where the humans do the work, because it’s just too complex. Basically, everything you can think of will probably be prone to be revolutionized by machine learning in one way or the other. That’s my prediction.”

Whether AI will replace humans, either in the workplace or in general, is still up for debate. However, we must prepare for a near future that will require us all to work and live alongside AI, in partnership.

AIX design will become ever more important as all stakeholders in the development of AI – from the researchers and developers, to the policymakers and ultimately the end-user – consider the emotional barriers posed by humans and the need to design for purpose when considering the ways that humans and AI will collaborate. How do we ensure we have the skills? How do we design AI to be a good companion or co-worker? They say relationships are hard and require work to be successful.

We should assume the same is true with our AI relationships.

Adjacent Technologies

AI is developing fast, but it isn’t doing it alone. In fact, many technologies are advancing simultaneously, further enabling the advancement of AI and creating an exciting future full of possibility.