Context

Context deals with the environmental, cultural and personal nuances that need to be considered to ensure equitable and purpose-driven AI. Home, work, car and public spaces require different social rules, while individual personalities and values require AI that reasons, understands, explores and adapts.


The age of reasoning

In order for consumers to appreciate the true benefits of artificial intelligence, it is imperative that developers embrace the contextual side of the equation. But what exactly does that mean?

To be sure, it is at this stage that AI goes beyond surface-level interactions. It not only performs the basic requirements but also makes recommendations, such as what you should eat before a business meeting that could turn out to be stressful, or the perfect restaurant to visit with friends later that night.

What needs to be stressed here is that there are two distinct thought processes when it comes to defining artificial intelligence and context.

In a blog post written last year, Oliver Brdiczka zeroed in on two fundamentally different scenarios: what exists now and what could soon be possible. Brdiczka is an artificial intelligence and machine learning architect working on Adobe Sensei, the company’s AI and machine learning technology, which connects to Adobe’s cloud and helps marketing professionals make more informed decisions.

AI, he wrote, is powering more and more of the services and devices that we use daily, such as personal voice assistants, movie recommendation services and driving assistance systems.

“And while AI has become a lot more sophisticated, we all have those moments where we wonder: ‘Why did I get this weird recommendation?’ or ‘Why did the assistant do this?’ Often, after a restart and some trial and error, we get our AI systems back on track, but we never completely and blindly trust our AI-powered future.

-

Interview David Foster

Head of Lyft Transit, Bikes and Scooters

“What worries me is AI-based systems that are controlling critical things and are thrown into the market before they are fully either developed or regulated.”

David Foster, Head of Lyft Transit, Bikes and Scooters

“These limitations have inspired the call for a new phase of AI, which will create a more collaborative partnership between humans and machines. Dubbed ‘Contextual AI’, it is technology that is embedded, understands human context and is capable of interacting with humans.”

This describes a more advanced and complex system, but it skips a step. Before we can arrive at the age of Contextual AI, AI must first understand context.

Sri Shivananda, Senior Vice President and Chief Technology Officer at PayPal, likens the emergence of context in AI to the influence mobile communications has had on society.

“We’ve just seen over the last decade how mobile has played a significant role in changing payment behavior and payment experiences,” he says. “As we go forward, design and user experiences will continue to play a critical role in how these experiences come about.

“Commerce is going to become contextual. It is going to be surrounding us where we are. It may be through a smart speaker interaction or a continued interaction with a desktop, a laptop or a mobile device, or for that matter with the car that you’re driving in. As commerce becomes contextual, AI has to become contextual as well.”

According to the Levels of AIX Framework, contextual AI will truly come to fruition in Level 3. At this level, AI understands the patterns and principles across systems and uses reasoning to predict and promote positive outcomes for users. This is termed ‘causality learning’. To achieve it, AI must be designed with end-users in mind. It must also account for the many contextual components that shape our own experiences as humans: the spaces, personalities and values that underpin the common-sense rules of our society.

Context can be defined in many ways, but at the end of the day it is about ensuring that AI can read all of the signs, whether that means understanding its environment or behaving appropriately for the situation.

Spaces

Drawing the line between work and home life

How people behave at home is fundamentally different from how they behave at the grocery store, at work or at a nightclub. We dress, act and experience each of these spaces differently. We do so based on certain assumptions and knowledge about the unwritten rules attached to them.

COVID-19 has created a new normal in which our homes are now also our office, our doctor’s clinic, our movie theatre and our bank. What are the implications of this? How should we design these separate AI systems so that they share information with each other, but do not share too much?

The way spaces are used has changed with the onset of the pandemic, and AI developers and designers must reassess their assumptions. With the lines blurred between home life and work life, a great deal of thought must now go into AIX design. End-users must be part of the process of defining how AI understands its environment. They must also help decide how it acts on that understanding to provide optimal user experiences.

As home and work AI-enabled services evolve, we need to consider where the line will be drawn between them. Will they remain separate entities, or will they somehow merge and be able to communicate with each other? It may be a dilemma for some and an opportunity for others. Either way, it is worth discussing now as we re-establish commonly held assumptions about our spaces.

David Foster, Head of Lyft Transit, Bikes and Scooters, believes interoperability is key.

“AI is typically evolving today in isolated islands: most of our cars aren’t talking directly to our home and certainly aren’t talking to our home without our explicit inputs to that AI in the vehicle. I think ultimately that will happen, because we have to assume that AI is going to operate in a heterogeneous world with a lot of non-AI-driven consumers or other devices or vehicles … so interoperability is going to be key. The ability to be adaptive and predictive and context-aware is also going to be key.

“People might be nervous about AI talking unaided, let’s say, from our car to our home or to our work, because of concerns around security or identity or intent. But using AI so that I can ask my car to turn on the lights or the heat in my house on the way home … I’m showing intent and I’m showing context. I think many people would be comfortable with that type of approach today.”

As we design new artificial intelligence experiences for end-users, it will be ever more important to consider our environment and the contextual realities we assume as humans within different spaces. Even more important will be the challenge of codifying our very human understanding of the world. That understanding rests on our sense of place, our behaviours and especially the information we share between those spaces.

When our work AI and home AI converge with our entertainment AI and our healthcare AI, there will be serious implications for how data is shared across the systems, when it is shared and for what purpose. Developers and policymakers will need to consider the firewalls and handshakes required, and end-users should be part of this process every step of the way.

-

Interview Alex Zafiroglu

Deputy Director, 3A Institute (3Ai)

“As an anthropologist and as someone that has worked in an advanced R&D technology company and worked on product teams, I would say, no, you’re never going to get a solution that works for all people at all times.”

Alex Zafiroglu, Deputy Director, 3A Institute (3Ai)

Values

AI systems must align with human ideals

Iason Gabriel is a senior research scientist on the ethics research team at DeepMind. Earlier this year he released a paper entitled ‘Artificial Intelligence, Values and Alignment’. In it, he maintains that the question of ‘value alignment’ centres on how to ensure that AI systems are properly aligned with human values. The question can be broken down into two parts. The first part is technical and focuses on how to encode values or principles in artificial agents so that they reliably do what they ought to do. The second part is normative and focuses on which values or principles it would be right to encode in AI.

“Any new technology generates moral considerations. Yet the task of imbuing artificial agents with moral values becomes particularly important as computer systems operate with greater autonomy and at a speed that ‘increasingly prohibits humans from evaluating whether each action is performed in a responsible or ethical manner’,” Gabriel wrote.

The first part of the paper notes that while technologists have an important role to play in building systems that respect and embody human values, the task of selecting appropriate values is not one that can be settled by technical work alone.

Part of the challenge is that AI and values form such a complex combination. On one hand, software developers are creating what will surely be the future. On the other hand, there are human beings, each with differing interests, emotions, intelligence and tastes.

Does our AI need to understand religion? Culture? Nationality? How will it learn our personal values and are those different from our household values?

At the Stanford Institute for Human-Centered Artificial Intelligence, co-director Fei-Fei Li continues to champion the importance of bringing diverse perspectives to the development of AI. Li is clear that while values can be imbued into AI, it is the humans and companies behind it that matter.

“When we design a technology, when we make it into a product, into a service, when we ship it to consumers, the whole process includes our values,” she told CNBC. “Or the values of the companies, or the solution makers.”

But values are not universal, are highly personal and can also change over time. How, then, can we design AI to understand the context of our human values, and which values should it understand? And how are the values of developers and corporations imprinted in the AI?

Dr Christina Colclough of The Why Not Lab raises some important questions: “We have to understand that, inherent in all of these algorithms, are also values, norms, but they’re values and norms of the country, the state, the area where the developers are from, who has created these tools or the company owners … When an American algorithm is applied in Kenya or in Estonia or in Germany, are we actually experiencing some form of digital colonialism? How do we ensure that any algorithm is very explicit around the values, the norms on which it is founded and also, how can it be adapted to the cultures of the local environment where it will be used? How do we make sure we don’t end up in this nightmarish sort of social norms or social credit system, where we’re all judged against algorithmic norms which have been defined in very few contexts?”

Inevitably, it will come down to the symbiotic relationship between values and ethics. We can think of ethics as how we apply our values in building and using AI. Ethics are the rules and standards that make our values tangible in our inputs to, and interactions with, the technology. Ethics seems to stop at protecting our values and ensuring they can be lived out and not infringed upon. Values, by contrast, extend into what we want to prioritize and prefer to invest in as humans.

Perhaps by applying AIX design and including as diverse a range of perspectives as possible in the development of these systems, we can help ensure the most equitable and valuable experiences possible for end-users.

Purpose

Without trust from a human there is nothing

A principal theme in Level 3 of the AIX Framework revolves around understanding. At this point, AI understands the patterns and principles across systems to meet predefined missions.

Notably, at this level AI shares learning outcomes to achieve a broader mission, whatever that mission may be. It could mean interpreting the mood of an individual at one end of the spectrum or helping society as a whole grow and learn at the other.

An example of a value defined as a purpose for AI occurred recently when the EU consumer group Euroconsumers published a white paper on how consumers can use AI to accelerate Europe’s sustainability agenda.

Two key takeaways came from the report:

- AI-driven tools and complementary technologies can help power the sustainability transition in different industries, including household utilities, food, mobility and retail.

- There is currently a significant lack of trust and satisfaction in the consumer AI experience.

Companies developing consumer-facing AI services for the green and digital transition have a perfect opportunity to help people achieve their sustainability goals and demonstrate they can deliver on trustworthy AI at the same time.

There was also a stark warning for all AI developers: Trust is paramount.

“Without trust from the consumer, AI will not be able to achieve its true potential,” said spokesperson Marco Pierani. “It would only be detrimental. More now than ever, tech companies should maximize their efforts to create AI that would not only improve the lives of consumers, but society as a whole.”

For Alex Zafiroglu, Deputy Director at the 3A Institute, purpose speaks to the underlying problem that the AI is solving for the end-user. This purpose must align with our goals, and it is the context that needs to underpin the AI’s understanding and functioning.

“We need to have usage roadmaps or experience roadmaps, particularly for thinking about an AI experience framework, such as the [AIX Framework]. And in that case, you need to think very critically about those humans who are at the far end of the solution, who are basically what the industry would call end-users. You also need to think about the value that is being generated by the application of artificial intelligence solutions in a particular context, because context is incredibly important. You need to think about to what ends you are building solutions, both for those end-users and for your direct customer.”

Creativity

Onus is on both the developer and end-user

Creativity, says IBM, may be the ultimate moonshot for artificial intelligence: “Already AI has helped write pop ballads, mimicked the styles of great painters and informed creative decisions in filmmaking.”

Experts, it states in a blog, “contend that we have barely scratched the surface of what is possible. While advancements in AI mean that computers can be coached on some parameters of creativity, experts question the extent to which AI can develop its own sense of creativity. Can AI be taught how to create without guidance?”

“Just a few years ago, who would have thought we would be able to teach a computer what is or is not cancer?” asks Arvind Krishna, senior vice president of hybrid cloud and director of IBM Research. “I think teaching AI what’s melodic or beautiful is a challenge of a different kind since it is more subjective, but likely can be achieved.

“You can give AI a bunch of training data that says, ‘I consider this beautiful. I don’t consider this beautiful.’ And even though the concept of beauty may differ among humans, I believe the computer will be able to find a good range. Now, if you ask it to create something beautiful from scratch, I think that’s certainly a more distant and challenging frontier.”

More purpose-driven AI may still be some way off. One example would be a personal AI that combines data from your fridge, your smart stove and Uber Eats recommendations to suggest creative ideas for lunch. AI could also learn to look beyond the obvious context of certain inputs, generating novel combinations and juxtapositions that create new contextual meaning for the end-user. That could be quite valuable, since it is already how we solve problems as humans.

How should developers consider the kind of creativity that sparks unexpected joy? What about creativity that makes the type of predictions and unique insights that end-users find useful? Plenty of interesting things can be done simply by changing which parameters developers use and how end-users play with them.

The interesting juxtaposition will be in the context we see around AI creations. By playing with the context that AI is able to understand, we may see more interesting, intentional creations across more dimensions of context.

The onus will be on the developer and the end-user to get it right.

Personality

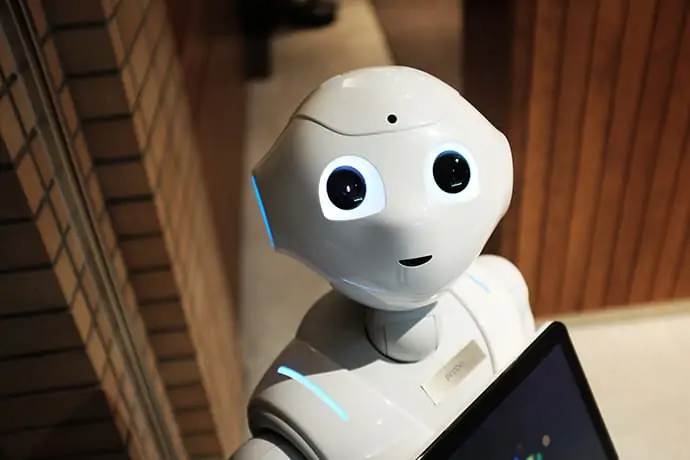

More a case of personalization

Mita Yun, the co-founder and CEO of Zoetic AI, makers of the emotive companion robot Kiki, suggested in a recent blog that when we think of Alexa having a personality, we’ll describe it as her responding to certain things in a certain way – what she does or doesn’t do based on a question or request.

“That’s because Alexa doesn’t really have a personality. Almost every single AI or voice-based product does not have one. When The Food Network discusses how Rachael Ray’s ‘personality’ will come through the voice of Alexa, what they mean is that they’re adding expressions in her voice.”

Then again, when it comes to personality and context, there is an argument to be made that it is not so much about personality as about personalization. In that regard, there are questions to ponder. Key among them: what do developers of AI systems and applications need to think about as the technology starts to allow for much more personal, real-time engagement?

Just as humans interact through non-verbal cues, how can AI know and respond appropriately if we are annoyed, angry, sad and so on? How should it behave differently for different personalities? Rather than a home AI, a car AI and so on, should the future be more concentrated around a personal AI? What are the dangers of that?

This is all about the context of people’s unique personalities. It also means using tried-and-true AIX Design methodologies that allow AI to take into consideration different people’s attitudes, beliefs and everything else that makes up a personality.

A multitude of questions must be answered, such as: how will an AI system serve one person differently from another, and how can it understand that people have moods? How does the feedback loop shape our personality? What personality should we show if it is a point of feedback?

Feedback loops, writes Natalie Fletcher in a blog post from AI company Clarifai, “ensure that AI results do not stagnate. This also has a significant advantage in that this data used to train new versions of the model is of the same real-world distribution that the customer cares about predicting over. Without them, AI will choose the path of least resistance, even when that path is wrong, causing its performance to deteriorate. By incorporating a feedback loop, you can reinforce your models’ training and keep them improving over time.”

What must certainly also be avoided is a repeat of the Cambridge Analytica-Facebook scandal, which used behavioural models based on the OCEAN model. OCEAN is an acronym for openness, conscientiousness, extroversion, agreeableness and neuroticism, and the model was first developed in the 1980s to assess an individual’s personality.

To that end, what worries David Foster are AI-based systems that control critical things and are thrown into the market before they are fully developed or regulated in some sense or another. “I do still think that the human brain is a marvellous piece of computing equipment. And we do not quite fully understand all of the calculations that we are subconsciously making as we go about the world today. Therefore, how do we model those so that AI can make equivalently good decisions? And how do we model the decisions where there is no good answer to the problem?”

And since everyone’s personality is different, a dose of reality is also needed, both now and in the future. Alex Zafiroglu offers it in this insightful observation: “As an anthropologist and as someone that has worked in an advanced R&D technology company and worked on product teams, I would say, no, you’re never going to get a solution that works for all people at all times.”

Context is Queen

A good example of AI understanding context came last year, albeit one still a long way from being truly contextual. Amazon introduced Sidewalk, its vision of what it called a “neighbourhood network”, designed to make a person’s devices work better both inside their home and beyond the front door.

In the future, Sidewalk is meant to support a range of experiences from using Sidewalk-enabled devices to help find pets or valuables, to smart security and lighting, to diagnostics for appliances and tools.

There will no doubt be many iterations of Sidewalk and other services like it. Throughout, developers in particular need to think beyond creating a cool new AI application. They must also consider the contextual layers required to create the optimal AI experience for end-users, while tiptoeing around the potential ethical minefield.

As for how end-users will interface with AI, IBM, in a post on its website entitled ‘How conversation (with context) will usher in the AI future’, points out that in the “past few years, advances in artificial intelligence have captured the public imagination and led to widespread acceptance of AI-infused assistants. But this accelerating pace of innovation comes with increased uncertainty about where the technology is headed and how it will impact society.

“The consensus is that within three to five years, advances in AI will make the conversational capabilities of computers vastly more sophisticated, paving the way for a sea change in computing. And the key lies in helping machines master one critical element for effective conversation – context.”

Context can be defined in many ways, but at the end of the day it is about ensuring that AI can read all of the signs, be it the need to understand its environment or to behave appropriately depending on the situation. We humans read contextual cues all the time but lump them under what we call ‘common sense’. However, for AI systems and products, common sense isn’t very common at all.

In his interview for this report, Charles Lee Isbell Jr., Dean of Computing at the Georgia Institute of Technology, was asked: ‘Do you believe it’s possible to teach machines common sense and the assumptions we’ve built up as humans?’

“The answer is yes. We can build this and in fact, we must build these kinds of notions. And I think the way we do it is around story. We tell ourselves stories about how something is supposed to happen, and we build up these kinds of data structures and experiences that allow us to generalize from one to the other. What does it have to do with built-in assumptions?

“Well, there’s a whole bunch of assumptions built into that. The assumption is that other people are like you. And that they are doing the things like you are and that you can predict what they are going to do, because you know what they are going to do. You are fundamentally building this idea that the things I am interacting with are like me and they have the same desires and drives and the same physical limitations. And so on. Now this is a problem.

“If you actually don’t have anything in common physically or psychologically or whatever, because you may not even have a fundamental language upon which you can agree about what’s happening, common sense no longer works.”

In the digital age, they say that content is king. If so, as we move into a new era of developing systems and products for AI experience, it could be argued that context is queen … and we know who’s the real power behind the throne.

A.I. Around the World

It can sometimes seem like the world’s AI advancements come from experts concentrated in only a few major countries, but AI is a truly global endeavor with amazing talents applying themselves to furthering the field. Here are five examples of organizations driving the future of AI from around the world: